Islet is an open-source software project written in Rust that enables confidential computing on ARM architecture devices using the ARMv9 CCA. The primary objective of Islet is to enable on-device confidential computing and protect user privacy on end user devices.

While current confidential computing solutions mainly focus on server-side protection, it is equally important to safeguard user information at the user device level since that is where private data collection initially occurs. Furthermore, as more and more users rely on privacy apps such as private messengers, secure emails, password managers, and web browsers with privacy settings, there is a growing need to ensure privacy on user devices. Islet, an open-source project, addresses this need by providing a platform for ARM-based confidential computing.

Enabling CC on user devices will not only establish end-to-end CC throughout the entire data processing path, but it will also help create a secure computation model that enables processing of user private data on the user device using the same components that previously were employed at the server side without disclosing business logic. Furthermore, on-device confidential computing will be a key enabler for machine-to-machine computing without the need for server intervention

Feature Overview

- Realm Management Monitor

- Hardware Enforced Security

- Confidential Computing API Standardization

- Automated Verification

- Use case : Confidential Machine Learning

Overall Architecture

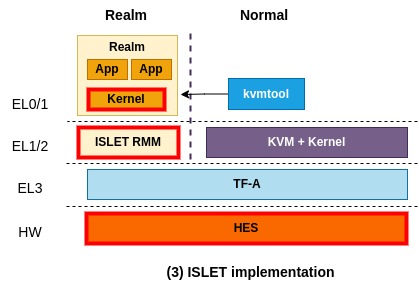

Islet provides a platform for running virtual machines (VMs) confidentially, with standard SDKs for easy integration with other confidential computing frameworks at upper layers. The platform consists of two key components: the Islet Realm Management Monitor (Islet-RMM) and Islet Hardware Enforced Security (Islet-HES).

Islet RMMoperates at EL2 in the Realm world on the application processor cores and manages the confidential VMs, known as realms.- On the other hand,

Islet HESperforms device boot measurement, generates platform attestation reports, and manages sealing key functionality within a secure hardware IP apart from the main application processor.

In designing Islet, we aim to to address the current security challenges in confidential computing technologies right from the very beginning. To ensure that our software is built with safety in mind, we have chosen to use the Rust programming language, known for its unique security model that ensures memory safety and concurrency safety. Moving forward, we also plan to incorporate formal verification techniques to further enhance the security of our design and implementation.

For more information, please visit our developer site.

A demo video (Confidential ML)

- This video shows how Islet achieves an end-to-end confidential machine learning with a chat-bot scenario.

- This video flows as follows.

- It starts with a slide that describes all components involved in this demo. All components will run on confidential computing platforms.

- (feed an ML model) The model provider feeds the ML model into the ML server. This is done through a secure channel established with the aid of the certifier framework.

- (run a coding assistant) A mobile device user asks a chat-bot application that runs on Islet for generating a function. And then, that request is passed on to the ML server through a secure channel. Finally, the user can see the result (i.e., function).

- (launch a malicious server) This time, we launch a malicious server to show a failure case. When it attempts to join the certifier service (on the right side of the screen), it will not pass authentication as it results in a different measurement. Therefore, the malicious server cannot interact with the mobile device user in the first place.

- To download this video, click here.

Contents

ARM Confidential Compute Architecture (CCA)

ARM CCA is the latest confidential computing technology that can extend confidential computing through to mobile devices (i.e., Samsung galaxy). For ARM-based devices, TrustZone has been the pillar of secure compute for over a decade and adopted for various use cases. However, one weakness of TrustZone makes it hard to keep up with an increasing number of applications that want to benefit from TrustZone. That is the lack of dynamic yet flexible memory allocation strategy.

To isolate TrustZone from normal worlds (non-secure worlds), hardware manufacturer like Samsung have had to dedicate some portion of physical memory to TrustZone, which raises a conventional memory-related tradeoff. To be fair, it's not a problem that only belongs to TrustZone, some other TEEs (e.g., SGX) also suffer from it. And this is one of the reasons why recent confidential computing architectures take secure virtual machine approach (e.g., AMD SEV, Intel TDX, ARM CCA) over process-based ones (e.g., Intel SGX).

From the hardware manufacturer perspective, the capability of dynamic secure memory allocation is definitely the most appealing feature among others. But, this is not the only thing Islet is excited about. Islet can benefit from ARM CCA in many aspects that include but not limited to:

- dynamic secure memory allocation, which allows more secure applications to coexist with non-secure applications.

- attestation, which allows other entities (e.g., service provider) to easily verify applications running on mobile devices, which in turn making things easier to build complex trustworthy services.

- device protection, which could be accomplished by a so-called secure virtualization as specified in this blog post.

On top of the above features, what's interesting to Islet is that CCA leaves the role of implementing key components that act as TCB (Trusted Computing Base) to manufacturers. In other words, hardware vendors can augment CCA to solve their unique challenges as long as their implementations adhere to the CCA specification. This flexibility would get significantly important considering updates when a new threat to confidential computing emerges. For example, there had been a lot of side-channel attacks targeting Intel SGX. However, since Intel SGX puts all security-related codes in hardware, such attacks couldn't be mitigated by platform updates, demanding updates on a per-application basis.

We believe that Islet takes advantages of strong features of CCA while augmenting CCA in many aspects to get to the point where mobile device users truly get a great security experience.

Application Developer

Application developers are who want to develop Confidential Applications. Confidential Application is kind of application running on Confidential Computing.

We provides Islet SDK which supports to build Confidential Applications.

Islet SDK provides Confidential Computing API (Attestation, Sealing).

You can run Confidential Applications not only on Arm FVP(arm64)

but also on Host PC(x86_64, simulated version) with Islet SDK.

For more information about Islet SDK,

please refer this document.

Setting Rust environment

The first step is to prepare Rust environment.

$ ./scripts/deps/rust.sh

Run the example app with SDK

You can easily explore confidential computing APIs on your x86_64 host machine.

Islet SDK provides code examples and the build script.

$ cd sdk

$ make run-simulated

# Islet SDK examples: A simulated app running on x86_64

Simulated attestation operation on x86_64.

Verify Realm Signature.

== Signature Verification:

Sign Algo = [ES384]

Public Key = ["0476f988091be585ed41801aecfab858548c63057e16b

Data = ["846a5369676e61747572653144a1013822405901b6a70

Signature = ["ec4f3b28a00feabd1f58f94acb27fdc7957545409f1c9

== End of Signature Verification

...

Attestation result Ok(())

Sealing result Ok(())

Example code snippet

Below is code snippet of the example. You can refer the whole example code.

#![allow(unused)] fn main() { use islet_sdk::prelude::*; // Attestation let user_data = b"User data"; let report = attest(user_data)?; let claims = verify(&report)?; println!("Debug: {:?}", claims); // Sealing let plaintext = b"Plaintext"; let sealed = seal(plaintext)?; let unsealed = unseal(&sealed)?; assert_eq!(plaintext, &unsealed[..]); }

Platform Developer

Platform developers are who want to develop Confidential Computing Platform Components. Platform components include from Realm Management Monitor(RMM) to Realm.

Islet provides Rust-based RMM and scripts to compose Confidential Computing Platform.

You can explore CCA platform with our scripts and

powerful third-party projects.

Setting build environment

The first step is to prepare to build our project.

./scripts/init.sh

Running a linux realm

// Start FVP on host

$ ./scripts/fvp-cca --normal-world=linux --realm=linux --rmm=islet

// Run a linux realm on fvp

$ ./launch-realm.sh

Running SDK sample apps after running a linux realm

// Move to shared dir on realm

$ cd /shared

// Insert RSI kernel module

$ insmod rsi.ko

// Run the sample app (rust)

$ ./sdk-example

// Run the sample app (c)

$ LD_LIBRARY_PATH=./ ./sdk-example-c

Running a linux realm with a networking support and prebuilt examples

See examples. To get details about its network configuration, see network.md

Testing the realm features

// Start FVP on fvp

$ ./scripts/fvp-cca --normal-world=linux --realm=linux --rmm=islet

// Test the realm features on fvp

$ ./test-realm.sh [attest]

Testing RMMs with tf-a-tests

# Islet RMM

$ ./scripts/fvp-cca --normal-world=tf-a-tests --rmm=islet

# TF RMM

$ ./scripts/fvp-cca --normal-world=tf-a-tests --rmm=tf-rmm

Testing RMMs with ACS

# Islet RMM

$ ./scripts/fvp-cca --normal-world=acs --rmm=islet

# TF RMM

$ ./scripts/fvp-cca --normal-world=acs --rmm=tf-rmm

Network configuration

Enable the capability of networking

In the environment of FVP-based emulation, there are many components involved so enabling a network is not an easy task. The three components involved are:

- (1) PC Host (Ubuntu only supported at this time of writing), which tries to launch FVP Host.

- (2) FVP Host, which is going to be running as a guest machine of PC Host.

- (3) Realm, which is going to be launched by FVP Host and acts as a guest to FVP Host.

In our network configuration, each of the three has different static IP address so that they can communicate with each other by specifying a proper destination IP address. Under this setting, any of the three can act as either server or client.

And here is how to make "FVP Host and Realm" capable of communicating through to PC Host.

First, make sure you are in the root directory of Islet and go through the following instructions.

In most cases, it would be sufficient to use a default configuration but --host-ip and --ifname.

# full command:

# ./scripts/fvp-cca --normal-world=linux-net --realm=linux --rmm=islet --hes --no-telnet --rmm-log-level=info --ifname=<the network interface name in your PC host> --host-ip=<the IP of your PC host>

$ ./scripts/fvp-cca --normal-world=linux-net --realm=linux --rmm=islet --hes --no-telnet --rmm-log-level=info --ifname=enp3s0 --host-ip=111.222.333.15

# this takes a default network configuration in which

# --host-ip: put in the IP address of your PC Host (111.222.333.15)

# --ifname: put in the network interface name of your PC Host (enp3s0)

# --host-tap-ip: 193.168.10.1 (default value)

# --fvp-ip: 193.168.10.5 (default value)

# --fvp-tap-ip: 193.168.20.1 (default value)

# --realm-ip: 193.168.20.10 (default value)

# --route-ip: 193.168.20.0 (default value)

In this setting, both FVP Host and Realm are able to connect to external networks (i.e., internet) through PC Host's network interface you specify through --ifname.

A closer look at network configuration

This is how the aforementioned three components interact with each other:

// A default configuration

// Realm: IP: 193.168.20.10 (static address), Gateway: 193.168.20.1 (the tap device of FVP Host)

// FVP Host: IP: 193.168.10.5 (static address), Gateway: 193.168.10.1 (the tap device of PC Host)

// PC Host: IP: 111.222.333.15 (a real IP address + tap device + Source NAT)

Realm <----------------> FVP Host <-----------------> PC Host

(tap network) (ipv4_forward) (tap network)

Verification

We formally verify Islet using Kani's model checking. Our verification harnesses adopt the same input and output conditions as well as similar structures used in TF-RMM's harnesses which are extracted from Machine Readable Specification. It would help to check the conformance of the two systems written in different languages.

Verification dependency

Available RMI targets

rmi_features

rmi_granule_delegate

rmi_granule_undelegate

rmi_realm_activate

rmi_rec_aux_count

rmi_rec_destroy

rmi_version

Verifying Islet

(in islet/model-checking)

# Choose one among the list in `Available RMI targets` for the value of `RMI_TARGET`

$ RMI_TARGET=rmi_granule_undelegate make verify

For more about Islet's model-checking, please refer to here.

Islet Verification

Work done for Islet's Model Checking

Refactoring for Overall State Reduction

Reducing the overall state is a key technique for model checking, which often involves computationally infeasible formulas. For this purpose, we have refactored Islet in the following ways: Array-based Granule Status Table, Integration of Realm and RD as well as VCPU and REC, and Constraint Retrieval Modification.

-

Array-based Granule Status Table

We maintain a page table-based Granule Status Table in order to reduce the overall memory consumption in Islet. This data structure, while being beneficial in terms of memory usage, was a main obstacle in modeling checking Islet, because using it would cause a state explosion. To address this issue, we added an array-based Granule Status Table which could be selectively chosen with conditional compilation. The intention is for reducing the overall complexity of page table-based one, helping to reason about the implementation.

When the array-based Granule Status Table is chosen, the call sequence could be largely simplified from the caller's perspective like the below, reducing the overall state in model checking.

#![allow(unused)] fn main() { [before] // Previously, the below match was used to expand the page table entry. let mut granule = match get_granule_if!(addr, GranuleState::Undelegated) { Err(MmError::MmNoEntry) => set_state_and_get_granule!( addr, GranuleState::Undelegated) other => other, }?; [after] // The match is no longer used, as the array will not be expanded. let mut granule = get_granule_if!(addr, GranuleState::Undelegated)?; } -

Integration of Realm and RD

Before the refactoring, Realm and RD (Realm Descriptor) had almost the same purpose and overlapped functionalities, increasing the overall complexity in verifying the system. To address this issue, we changed to keep only RD which is defined by spec.

The summary of changes is like the below.

#![allow(unused)] fn main() { -pub struct Realm { - id: usize, - pub vmid: u16, - pub state: State, - pub vcpus: Vec<Arc<Mutex<VCPU>>>, - pub page_table: Arc<Mutex<Box<dyn IPATranslation>>>, - pub measurements: [Measurement; MEASUREMENTS_SLOT_NR], -} pub struct Rd { - realm_id: usize, + vmid: u16, ... + s2_table: Arc<Mutex<Box<dyn IPATranslation>>>, hash_algo: u8, + pub measurements: [Measurement; MEASUREMENTS_SLOT_NR], + pub vcpus: Vec<Arc<Mutex<VCPU>>>, } }With the change, the call sequence could be largely simplified from the caller's perspective, reducing the overall state in model checking.

#![allow(unused)] fn main() { [before] let (s2tte, last_level) = get_realm(realm_id) .ok_or(Error::RmiErrorOthers(NotExistRealm))? .lock() .page_table .lock() .ipa_to_pte(GuestPhysAddr::from(ipa), level) .ok_or(error_code)?; [after] let (s2tte, last_level) = rd .s2_table() .lock() .ipa_to_pte(GuestPhysAddr::from(ipa), level) .ok_or(error_code)?; }#![allow(unused)] fn main() { [before] let measurements = crate::realm::registry::get_realm(realmid) .ok_or(Error::RmiErrorOthers(NotExistRealm))? .lock() .measurements; [after] let measurements = rd.measurements; } -

Integration of VCPU and REC

Before the refactoring, VCPU and REC (Realm Execution Context) had almost the same purpose and overlapped functionalities, increasing the overall complexity in verifying the system. To address this issue, we changed to keep only REC which is defined by spec.

The summary of changes is like the below.

#![allow(unused)] fn main() { -pub struct VCPU { - pub context: Context, - pub state: State, - pub pcpu: Option<usize>, - me: Weak<Mutex<Self>>, -} +[repr(C)] pub struct Rec<'a> { + pub context: Context, attest_state: RmmRecAttestState, ... - state: RecState, + state: State, ripas: Ripas, vtcr: u64, host_call_pending: bool, } }With the change, the call sequence could be largely simplified from the caller's perspective, reducing the overall state in model checking.

#![allow(unused)] fn main() { [before] let pa_offset = get_reg(rd, vcpuid, 2)?; let buffer_size = get_reg(rd, vcpuid, 3)?; [after] let pa_offset = get_reg(rec, 2)?; let buffer_size = get_reg(rec, 3)?; }#![allow(unused)] fn main() { [before] pub fn get_reg(rd: &Rd, vcpu: usize, register: usize) -> Result<usize, Error> { ... let value = rd .vcpus .get(vcpu) .ok_or(Error::RmiErrorOthers(NotExistVCPU))? .lock() .context .elr; ... } [after] pub fn get_reg(rec: &Rec<'_>, register: usize) -> Result<usize, Error> { ... let value = rec.context.elr; ... } } -

Constraint Retrieval Modification

We refactored constraint retrieval procedure of RMI and RSI. The main motivation of the refactoring is that insertion and get in the map are very expensive operations in the formal semantic and thus avoiding them is better if possible. It is also beneficial for runtime performance, as the previous insertions are no longer involved. The change assumes that the configuration regarding constraints does not change at runtime.

#![allow(unused)] fn main() { [before] lazy_static! { static ref CONSTRAINTS: BTreeMap<Command, Constraint> = { let mut m = BTreeMap::new(); m.insert(rmi::VERSION, Constraint::new(rmi::VERSION, 1, 1)); ... (caller) if let Some(c) = CONSTRAINTS.get(&cmd) { [after] fn pick(cmd: Command) -> Option<Constraint> { let constraint = match cmd { rmi::VERSION => Constraint::new(rmi::VERSION, 1, 1), ... (caller) if let Some(c) = pick(cmd) { } -

The Summary of Refactoring

Table 1 summarizes the number of physical lines of code involved by the refactoring.

The Type of Refactoring Addition Deletion Total Array-based Granule Status Table 621 367 988 Integration of RD and Realm 342 442 784 Integration of VCPU and REC 311 564 875 Constraint Retrieval Modification 49 133 182 Total 1323 1506 2829 Table 1. Line Counts of the Refactoring

Writing Harnesses

A harness is an essential component in model checking as it provides the entry point and prepares for the system execution (e.g., global state initialization). It acts as a bridge between the model checker and the system under verification, allowing the model checker to access the the system's inputs and outputs. The harness ensures that the correct input values are provided to the system and captures the corresponding output responses. Also, the harness can contain the behaviors of the system to validate.

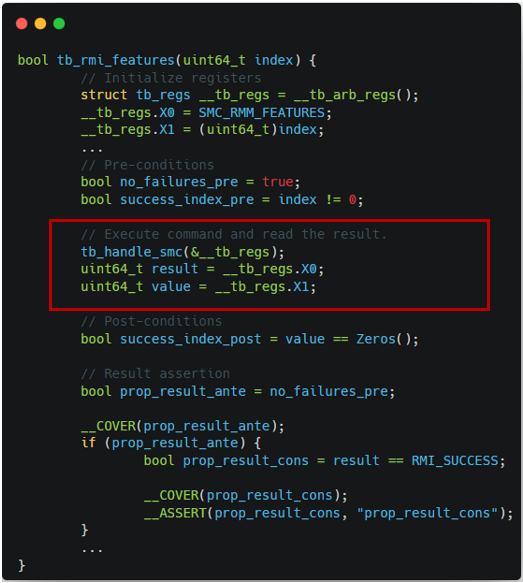

In writing harnesses, we adopt the same input and output conditions as well

as similar structures used in TF-RMM's

harnesses which are extracted from Arm's Machine Readable Specification.

We use this approach, because it would help to check the conformance of the

two systems, namely Islet and TF-RMM, written in different languages: Rust and C.

While using the similar structures, we also preserve Islet's unique characteristics

as much as possible. For example, we maintain two different versions of Islet's

Mainloop and Monitor (one for verification and the other for execution),

so that harnesses can help determine their target ABI's input and output

through Monitor's argument and return value as shown in Figure 1-2.

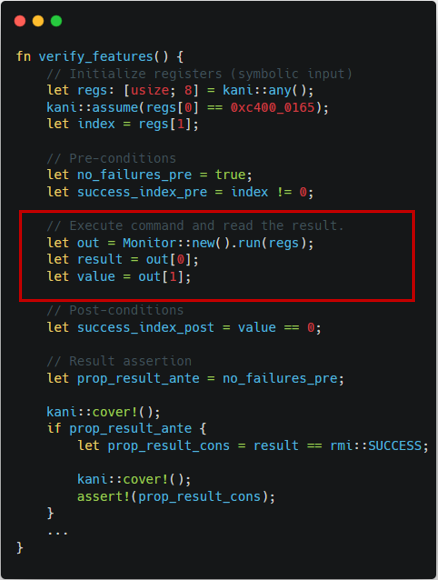

The other examples of harnesses can be also found in Figure 2-1 and Figure 2-2,

which describe the harnesses for RMI granule undelegate.

|  |

|---|---|

| Figure 1-1. TF-RMM features harness | Figure 1-2. Islet features harness |

|  |

|---|---|

| Figure 2-1. TF-RMM granule undelegate harness | Figure 2-2. Islet granule undelegate harness |

Embeding Invariants

Formal verification can help to prove interesting invariants to be held

in the entire states. We embed the invariants with is_valid() predicate

in target data structures. For example, the below is the specification

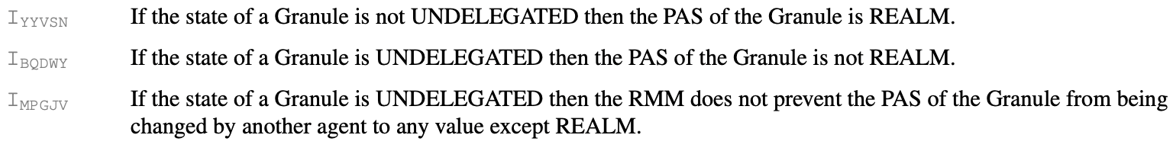

about granule's attributes.

Using the specification's conditions, is_valid() predicate can be

defined in Granule and Granule Status Table respectively.

The added predicate ensures that all granule entries in Granule Status

Table are in valid status conforming to the specification.

#![allow(unused)] fn main() { impl Granule { ... #[cfg(kani)] pub fn is_valid(&self) -> bool { self.state >= GranuleState::Undelegated && self.state <= GranuleState::RTT && // XXX: the below condition holds from beta0 to eac4 if self.state != GranuleState::Undelegated { self.gpt == GranuleGpt::GPT_REALM } else { self.gpt != GranuleGpt::GPT_REALM } } } }

#![allow(unused)] fn main() { impl GranuleStatusTable { ... #[cfg(kani)] pub fn is_valid(&self) -> bool { self.entries .iter() .fold(true, |acc, x| acc && x.lock().unwrap().is_valid()) } } }

Environment Modeling

Model checking requires an environment model to accurately analyze and verify system behaviors. By explicitly specifying the environment in which a system operates, one can ensure that all possible interactions between the system and its surroundings are considered during verification. During Islet's model checking, we have modeled EL3 Monitor and Host Memory to help verify Islet.

-

EL3 Monitor Modeling

Background: RMM interacts with EL3 monitor for privileged operations (e.g., Granule Partition Table change). While RMM itself is the target of verification and is translated by model checker, RMM's environment such as EL3 monitor is beyond the scope of verification, thus should be properly modeled during verification.

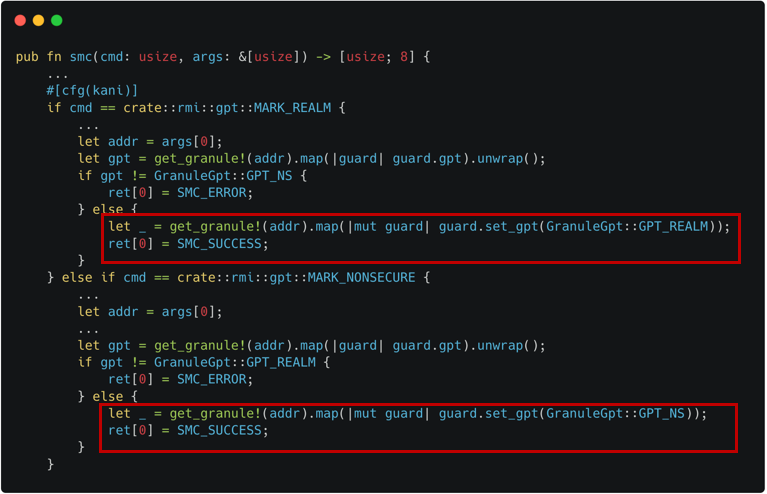

The approach we use: We insert a ghost

gptfield in a granule's entry for tracking GPT's change as shown in Figure 3-1. We also embed EL3's GPT handling code in SMC invocation as shown in Figure 3-2.

|  |

|---|---|

| Figure 3-1. Ghost gpt field for EL3 Modeling | Figure 3-2. GPT Handling for EL3 Modeling |

-

Host Memory Modeling

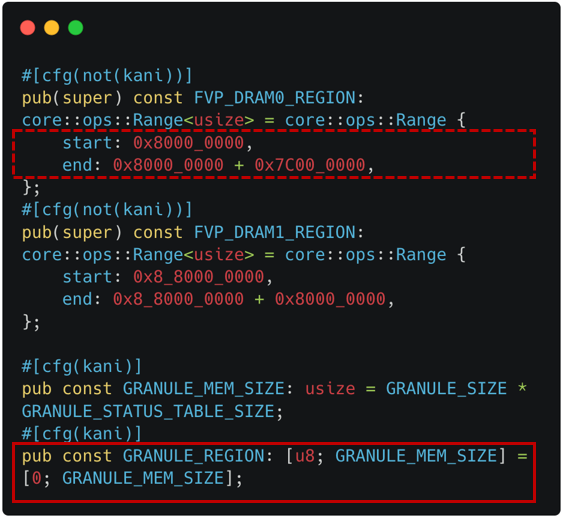

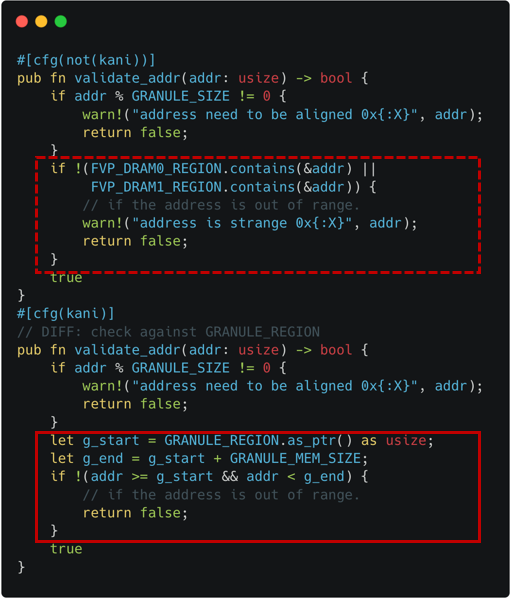

Background: The Host delegates and undelegates is memory for Realm World's usage. To verify RMM, the memory region should be properly modeled.

The approach we use: We model the Host memory as a pre-allocated memory region, because it helps to avoid a false-positive related to invalid memory accesses. Also, instead of using the same starting address (e.g., 0x8000_0000), we use a mock region filled with non-deterministic contents. It helps to address an issue related to the backend CBMC's pointer encoding where the first 16 bits are used for a pointer object's identifier.

The relevant implementation of the Host memory is shown as dotted line in Figure 4-1, while the pre-allocated mock region as solid line. The checking logic regarding valid memory range is also adjusted from dotted line (original check) to solid line (modeled check) in Figure 4-2.

|  |

|---|---|

| Figure 4-1. Host Memory Modeling (Declaration) | Figure 4-2. Host Memory Modeling (Check) |

Results

Steps to Reproduce

-

Verification Dependency

-

Available RMI targets

rmi_features rmi_granule_delegate rmi_granule_undelegate rmi_realm_activate rmi_rec_aux_count rmi_rec_destroy rmi_version -

Verifying Islet

(in islet/model-checking) # Choose one among the list in `Available RMI targets` for the value of `RMI_TARGET` $ RMI_TARGET=rmi_granule_undelegate make verify

Bugs Found

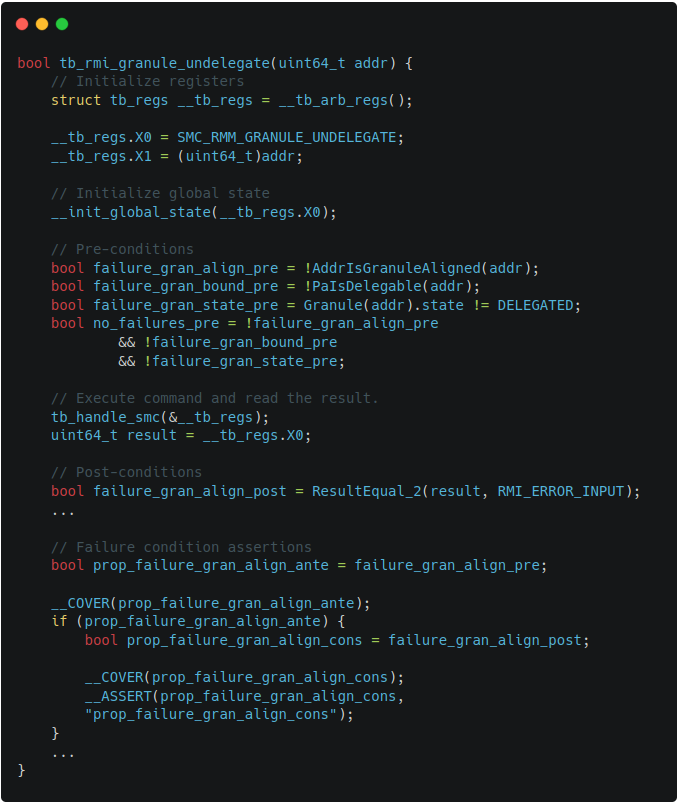

We found four correctness bugs and one security bug during Islet's model checking.

-

RMI Features

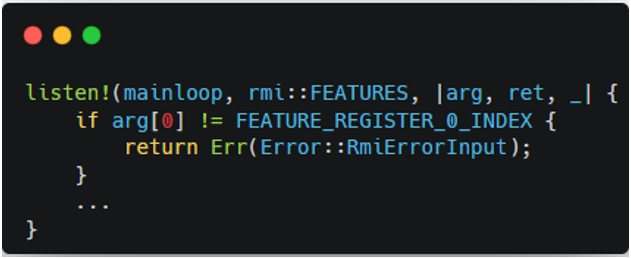

Figure 5-1 indicates the previous implementation of RMI features. When this code was passed as an input, the verification failed. It was because the code was violating the failure conditions of RMI features as shown in Figure 5-2. After fixing the line returning the error condition, the verification becomes successful.

|  |

|---|---|

| Figure 5-1. Buggy RMI Features Code | Figure 5-2. Failure Conditions of RMI features |

-

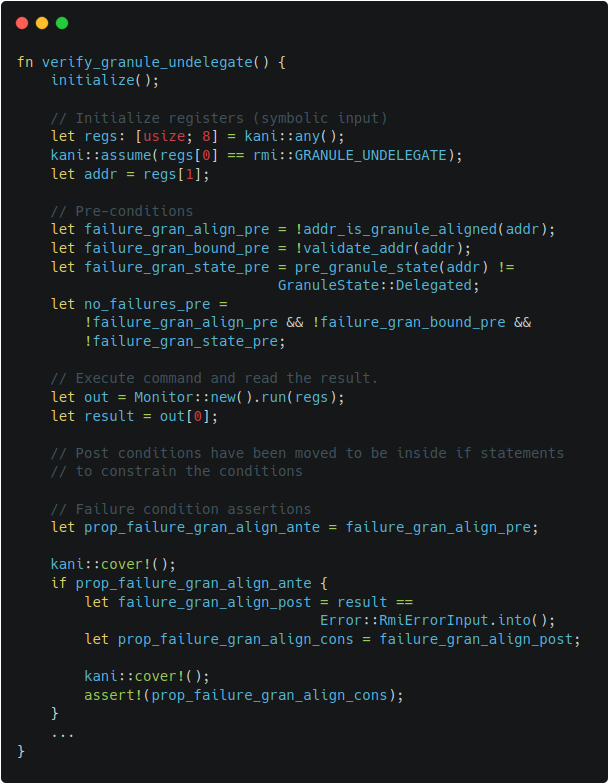

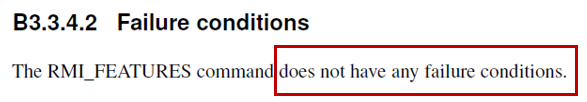

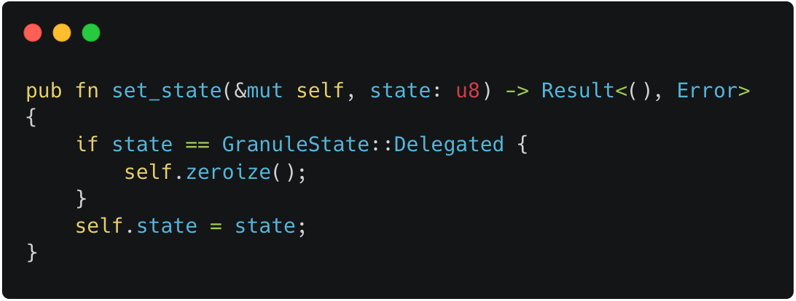

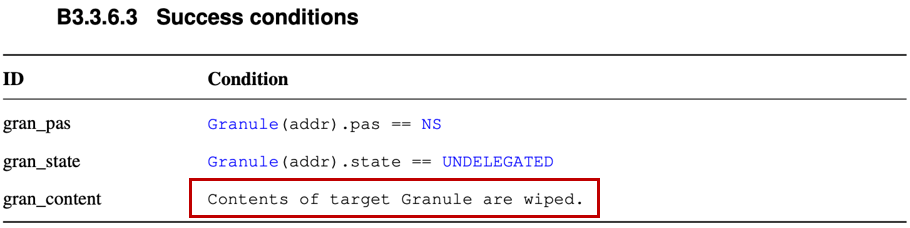

RMI Granule Undelegate

Figure 6-1 incidates the previous implementation of

set_state()which modifies the state of the target granule. It is invoked by several ABIs including RMI granule undelegate. When this code was used, the verification for RMI granule undelegate failed. It was because the code was violating the success conditions of RMI granule undelegate as shown in Figure 6-2. We fixed the code to zero-out the contents not only in the delegated state but also in the undelegated state, turning the verification results successful. Note that without the patch, it could have left sensitive information on the target granule, which might be obtained by attackers.

Figure 6-1. Buggy RMI Granule Undelegate Code

Figure 6-2. Success Conditions of RMI Granule Undelegate -

RMI Rec Destroy

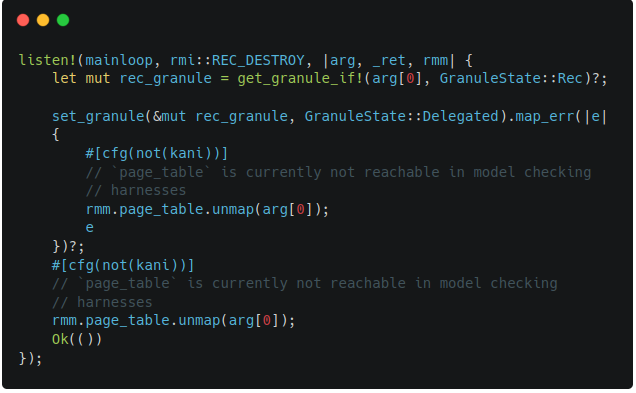

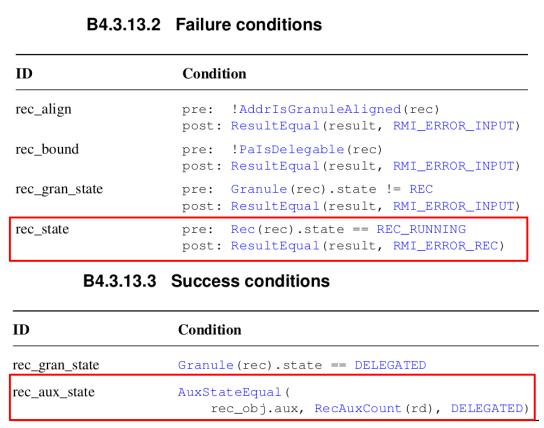

Figure 7-1 indicates the previous implementation of RMI rec destroy. When this code was passed as an input, two verification conditions failed. It was because the code was violating the failure condition as well as the success condition of RMI rec destroy as shown in Figure 7-2. After adding the sanitization logic and granule setting code, the verification becomes successful.

|  |

|---|---|

| Figure 7-1. Buggy RMI Rec Destroy Code | Figure 7-2. Conditions of RMI Rec Destroy |

-

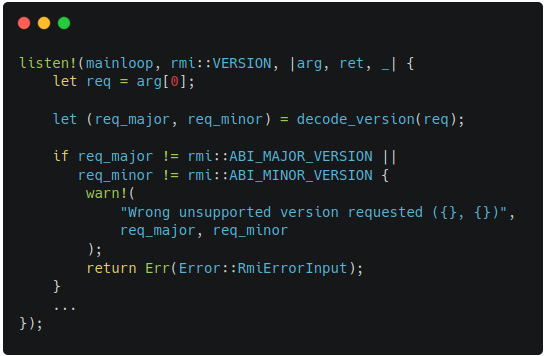

RMI Version

Figure 8-1 indicates the previous implementation of RMI version. When this code was passed as an input, the verification failed. It was because the code was not satisfying the conditions for output values (lower and higher) in failure conditions of RMI version as shown in Figure 8-2. After setting the output values beforehand, the verification becomes successful.

|  |

|---|---|

| Figure 8-1. Buggy RMI Version Code | Figure 8-2. Outputs of RMI Version |

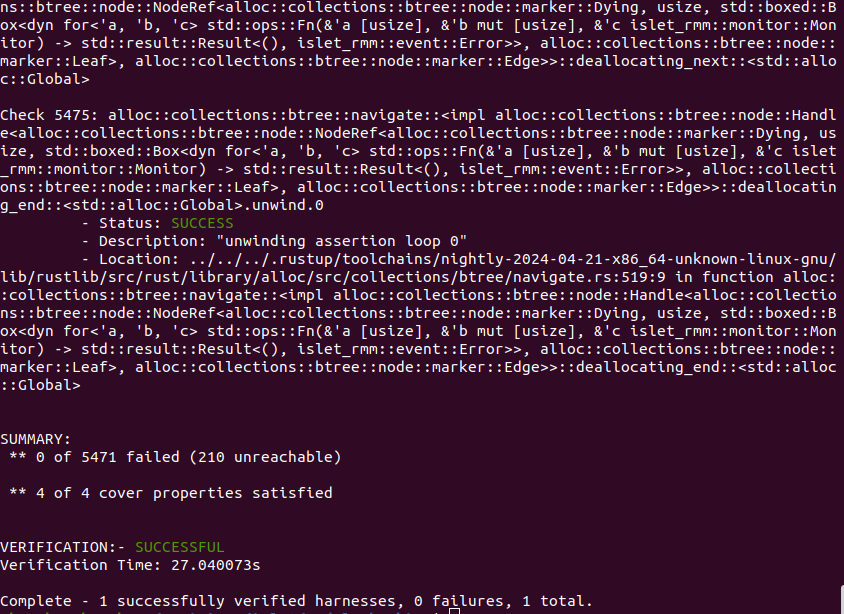

Verification Output

-

RMI Features

Figure 9-1 shows the output of Islet's model checking with the target of RMI features. It says that the total of 5271 verification conditions and the total of 4 cover conditions have successfully passed.

Figure 9-1. Model Checking Output of RMI Features -

RMI Granule Delegate

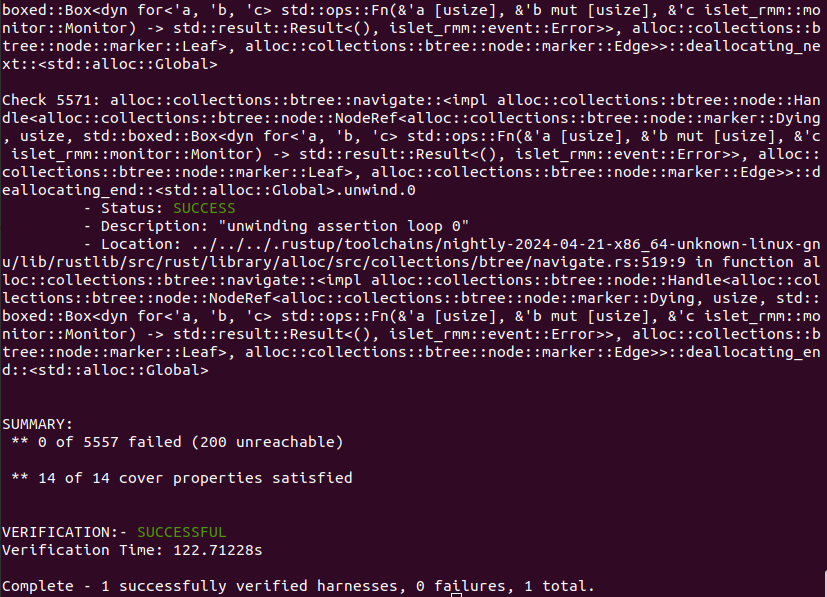

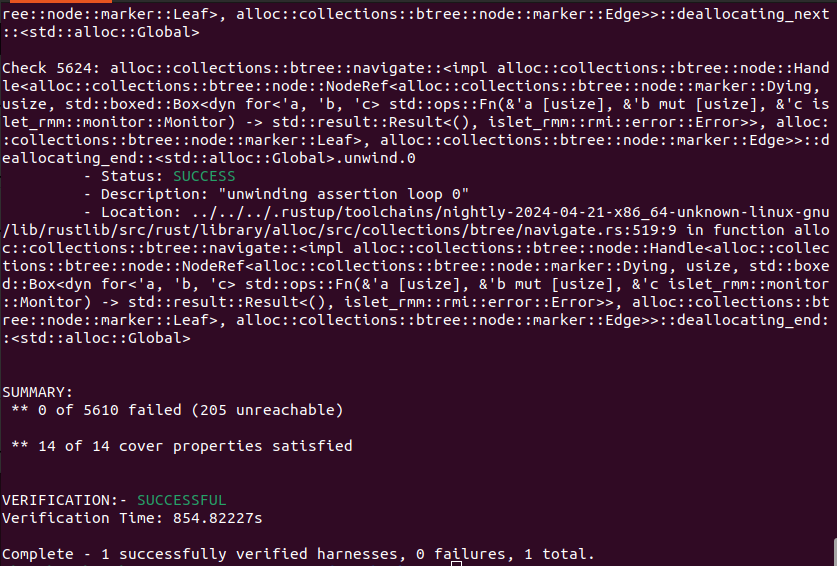

Figure 9-2 shows the output of Islet's model checking with the target of RMI granule delegate. It says that the total of 5557 verification conditions and the total of 14 cover conditions have successfully passed.

Figure 9-2. Model Checking Output of RMI Granule Delegate -

RMI Granule Undelegate

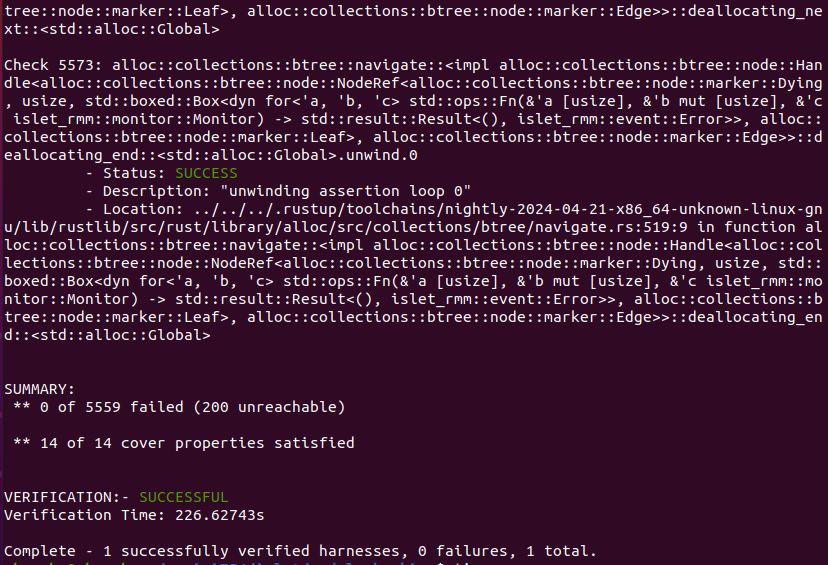

Figure 9-3 shows the output of Islet's model checking with the target of RMI granule undelegate. It says that the total of 5559 verification conditions and the total of 14 cover conditions have successfully passed.

Figure 9-3. Model Checking Output of RMI Granule Undelegate -

RMI Realm Activate

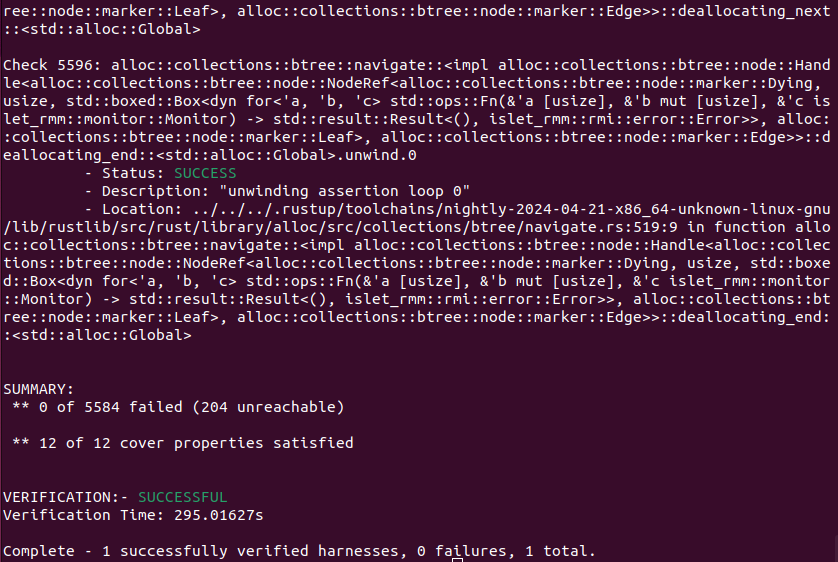

Figure 9-4 shows the output of Islet's model checking with the target of RMI realm activate. It says that the total of 5584 verification conditions and the total of 12 cover conditions have successfully passed.

Figure 9-4. Model Checking Output of RMI Realm Activate -

RMI Rec Aux Count

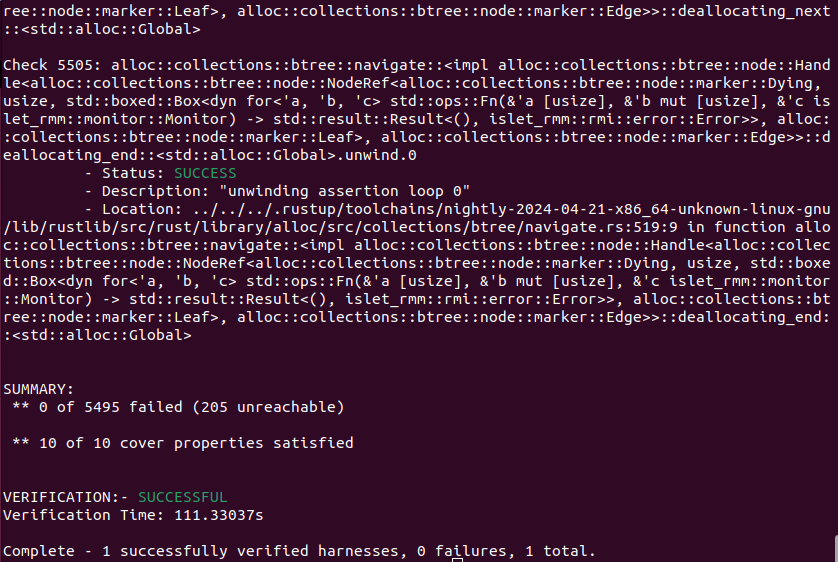

Figure 9-5 shows the output of Islet's model checking with the target of RMI rec aux count. It says that the total of 5495 verification conditions and the total of 10 cover conditions have successfully passed.

Figure 9-5. Model Checking Output of RMI Rec Aux Count -

RMI Rec Destroy

Figure 9-6 shows the output of Islet's model checking with the target of RMI rec destroy. It says that the total of 5610 verification conditions and the total of 14 cover conditions have successfully passed.

Figure 9-6. Model Checking Output of RMI Rec Destroy -

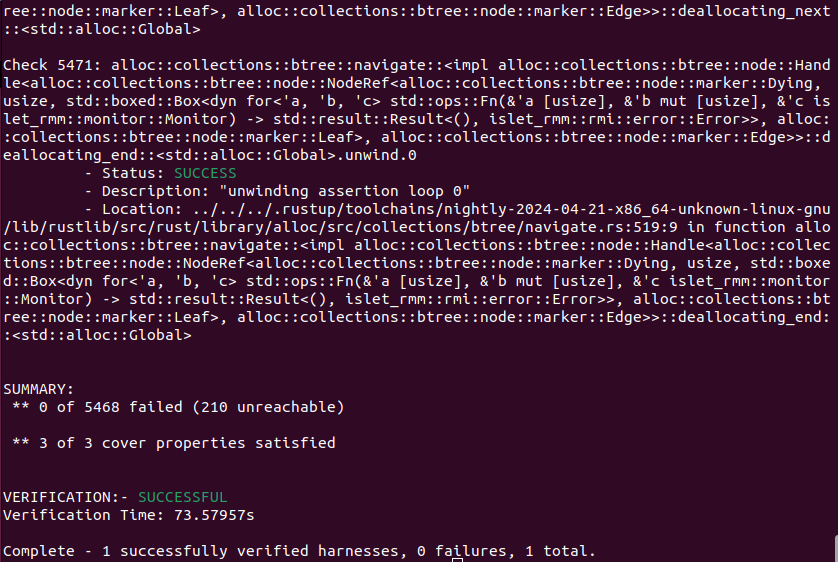

RMI Version

Figure 9-7 shows the output of Islet's model checking with the target of RMI version. It says that the total of 5468 verification conditions and the total of 3 cover conditions have successfully passed.

Figure 9-7. Model Checking Output of RMI Version

Contents

- 2.1. CCA platform architecture

- 2.2. Realm Management Monitor

- 2.3. Command Line Interface

- 2.4. Software Development Kit

- 2.5. Attestation

- 2.6. Certifier

Platform architectures

This page aims to describe an overall CCA platform architecture and what components Islet is going to make a valuable addition to as a manufacturer.

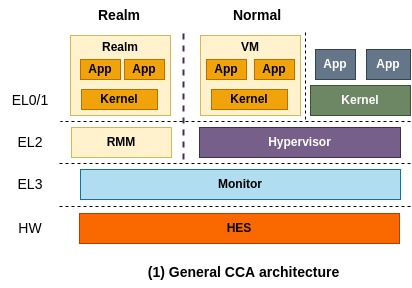

The general CCA architecture

The general CCA architecture is depicted above. From the high level perspective, you think of this architecture as the one similar to a conventional TrustZone application programming model where one application breaks down into two pieces, one for normal world and the other one for secure world. More precisely, a virtual machine that runs on the normal world can securely delegate confidential operations to a corresponding realm.

You might not want to split up an application into two pieces. Instead, you may want to put a whole application in Realm and run it without code changes as confidential container does. That scenario can also be realized and is specified in the next section.

In this architecture, RMM (Realm Management Monitor) and Monitor (also known as EL3 Monitor, shortly EL3M) are called trusted firmware components that CCA relies on for security, therefore they must be securely implemented and verified. Monitor manages GPT (Granule Protection Table) which tracks which world each physical page belongs to and is responsible for context switching between different worlds (i.e., between Realm world and Normal world).

More specifically, a physical page assigned to a realm is marked as a realm page in GPT and it is used in memory translation. So, when a VM that runs on Normal world attempts to access that page, it will result in a translation fault. This is how CCA offers isolation between different worlds and enables dynamic secure memory allocation.

On top of it, RMM takes the responsibility of isolating a realm from the other realms, by making use of existing virtualization-based isolation technologies such as NPT (Nested Page Table). Also, it controls the execution of Realm as typical hypervisors do and is responsible for interacting with Normal world to provide some services, for example sending a network packet through VirtIO to Normal world.

Lastly, HES (Hardware Enforced Security) represents a dedicated hardware component that is separated from CPUs and behaves as RoT (Root of Trust). Detaching the ability of RoT from Monitor (i.e., firmware that runs on CPU) helps to minimize the responsibility of RMM.

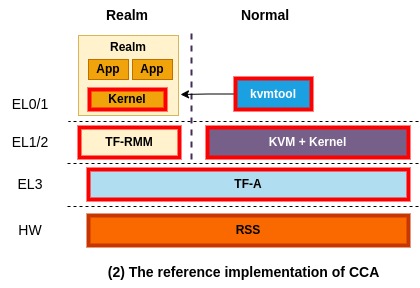

The reference implementation of CCA

ARM has been working hard to bring and merge the reference implementation of CCA into a open-source ecosystem. The above picture depicts the current reference implementation of CCA at the time of this writing. Red boxes represent components that are making up of the reference implementation. They implement and manage TF-RMM and TF-A for RMM and Monitor, respectively, and use them for the reference implementation of CCA. Also, for HES, they implement RSS that runs on a dedicated MCU (Micro Controller Unit) isolated from CPUs.

For a software stack of virtualization, ARM currently uses a combination of KVM and kvmtool which is a light-weight VMM (virtual machine monitor). kvmtool is a tiny VMM written in C and offers basic functionalities to launch and manage a KVM VM, including virtio. To launch a realm, kvmtool takes as input a kernel binary and a root file system and asks TF-A (Monitor) for creating and executing the realm. That request passes through a linux kernel that supports interaction with Monitor.

Lastly, an OS kernel that runs on Realm has to be updated to interact with TF-RMM. For example, a realm can ask TF-RMM for generating an attestation report.

Islet implementation

This picture shows what Islet is working on to augment CCA in the direction we want. The most important part would be Islet RMM which adheres to the specification of RMM but is written in Rust. We choose Rust to benefit from its strong type system and compile-time security, making things easier to reason about the security of complicated software.

In addition to rewriting RMM in Rust, we're planning to put in some new features that are needed to accomplish our goals and could differentiate Islet RMM from TF RMM. Note that what features to add in are still in internal discussion. Also, we're working on designing and developing our own HES tailored for real hardware platforms.

Other than the above components, Islet currently relies on the reference implementation of CCA.

Rust-based Realm Management Monitor

Command Line Interface

Islet provides Command Line Interface (CLI) which can explore CCA operations. CLI supports both x86_64 (simulated) and aarch64.

You can explore Attestation like below

$ cd cli

$ make x86_64

$ ./islet attest --output=./report

$ ./islet verify --input=./report

== Signature Verification:

Sign Algo = [ES384]

Public Key = ["0476f988091be585ed41801aecfab858...]

Data = ["846a5369676e61747572653144a10138...]

Signature = ["ec4f3b28a00feabd1f58f94acb27fdc7...]

== End of Signature Verification

== Realm Token cose:

Protected header = Header { alg: Some(Assigned(ES

Unprotected header = Header { alg: None, crit: [],

Signature = [ec4f3b28a00feabd1f58f94acb27f

== End of Realm Token cose

== Realm Token:

Realm challenge (#10) = [abababababababababababa

Realm personalization value (#44235) = [00000000000000000000

Realm hash algo id (#44236) = "sha-256"

Realm public key hash algo id (#44240) = "sha-256"

Realm signing public key (#44237) = [0476f988091be585ed41

Realm initial measurement (#44238) = [6190eb90b293886c172e

Islet SDK

Islet SDK is an open source SDK which provides confidential computing primitives to enclaves. There are two types of component which can use our API. One is the application type running on EL0. The other is the VM type running on EL1. Islet SDK focuses the application type first. We believe that you can easily migrate the existing applications to the Arm CCA World.

Supported Languages

Islet SDK is written in rust but we also support C/C++.

We use cbindgen which is the powerful tool

to create headers for rust libraries which expose public C/C++ APIs.

+--------+ +---------+

| sdk | => cbindgen => header => | app |

| (rust) | => cdylib => library => | (c/c++) |

+--------+ +---------+

Confidential Computing Primitives

Currently Islet SDK provides Attestation and Sealing. You can check reference code snippets.

Attestation

Rust code snippet

#![allow(unused)] fn main() { use islet_sdk::prelude::*; let user_data = b"User data"; let report = attest(user_data)?; let claims = verify(&report)?; print_claim(&claims); if let Some(ClaimData::Bstr(data)) = parse(&claims, config::STR_USER_DATA) { assert_eq!(user_data, &data[..user_data.len()]); } else { assert!(false, "Wrong user data"); } if let Some(ClaimData::Text(data)) = parse(&claims, config::STR_PLAT_PROFILE) { assert_eq!(data, "http://arm.com/CCA-SSD/1.0.0"); } else { assert!(false, "Wrong platform profile"); } if let Some(ClaimData::Bstr(data)) = parse(&claims, config::STR_REALM_INITIAL_MEASUREMENT) { println!("Realm initial measurement: {:X?}", &data); } else { assert!(false, "Wrong RIM"); } }

C++ code snippet

using byte = unsigned char;

byte report[2048], claims[1024], claim[1024];

int report_len, claims_len, claim_len;

std::string user_data("User Custom data");

if (!islet_attest((const byte*)user_data.c_str(), user_data.size(), report, &report_len))

return -1;

if (!islet_verify(report, report_len, claims, &claims_len))

return -1;

islet_print_claims(claims, claims_len);

const char CLAIM_USER_DATA[] = "User data"; // Claim title

if (!islet_parse("User data", claims_out.data(), claims_out_len, value_out.data(), &value_out_len))

return -1;

printf("Claim[User data]: %s\n", (char*) value);

Sealing

Rust code snippet

#![allow(unused)] fn main() { let plaintext = b"Plaintext"; let sealed = seal(plaintext)?; let unsealed = unseal(&sealed)?; assert_eq!(plaintext, &unsealed[..]); }

C++ code snippet

using byte = unsigned char;

byte sealed[2048], unsealed[2048];

memset(sealed, 0, sizeof(sealed));

memset(unsealed, 0, sizeof(unsealed));

int sealed_len = 0, unsealed_len = 0;

std::string plaintext("Plaintext");

if (islet_seal((const byte*)plaintext.c_str(), plaintext.size(), sealed, &sealed_len))

return -1;

if (islet_unseal(sealed, sealed_len, unsealed, &unsealed_len))

return -1;

printf("Success sealing round trip.\n");

SDK Software Design Document

Table of Contents

- SDK Introduction

- System Architecture

- APIs and Interfaces

- Functional Requirements

- Non Functional Requirements

- Testing Strategy

- Conclusion

1. SDK Introduction

Overview

This Software Design Document outlines the architecture and functionality of the SDK, which provides confidential computing features for realm developers. The SDK aims to offer a set of tools and APIs that enable those features within realms. The SDK encompasses the following key features:

- Attestation

- The ability to verify the authenticity and integrity of data, actions, or entities within realms

- Established this feature securely with our own HES(Hardware enforced security)

- Secure Channel

- Securely data transmission that is resistant to overhearing and tampering

- Collaborated with the multi-part C.C. Framework

- Sealing

- Securely storing and protecting sensitive data to prevent unauthorized access and tampering

- Provided this feature in a simulated version

Limitation

SDK provieds sealing features only simulated version.

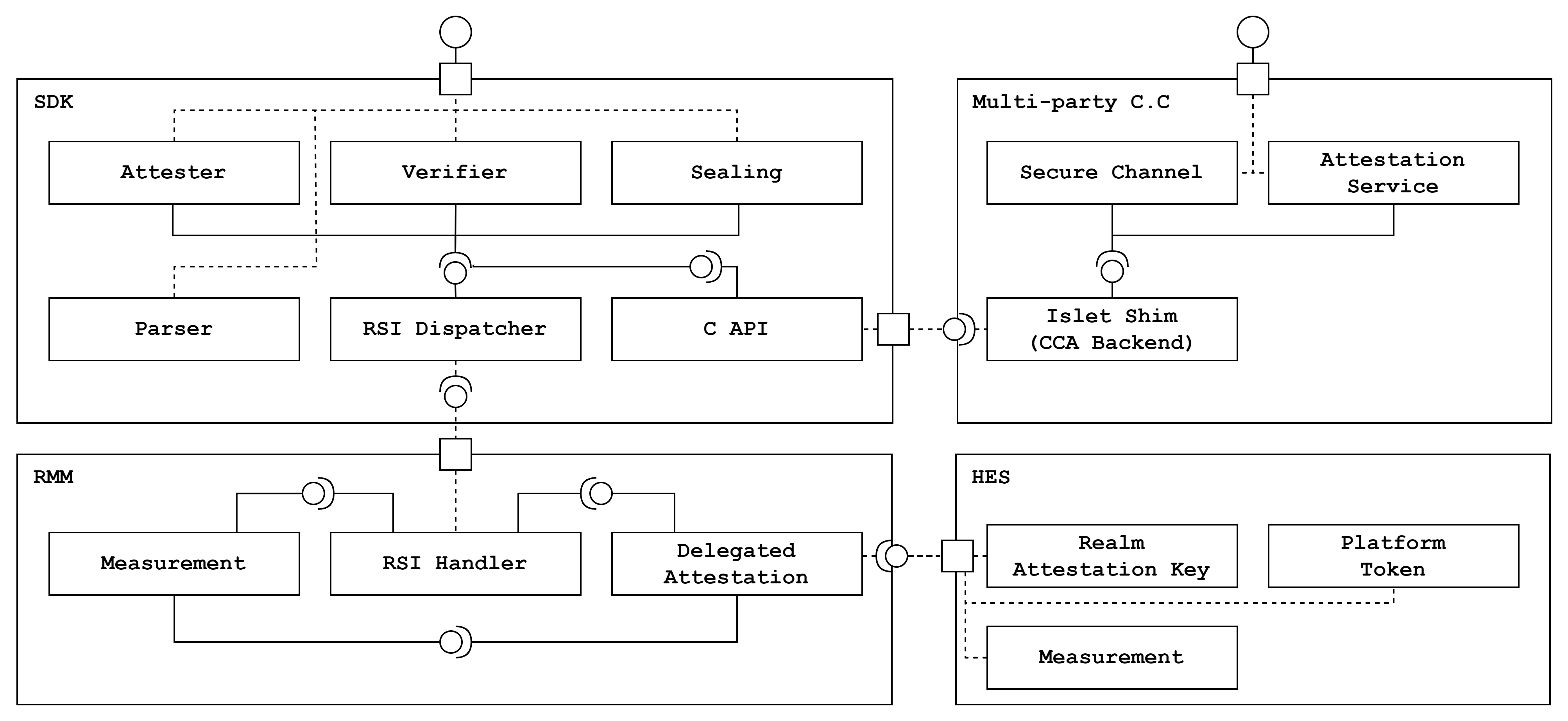

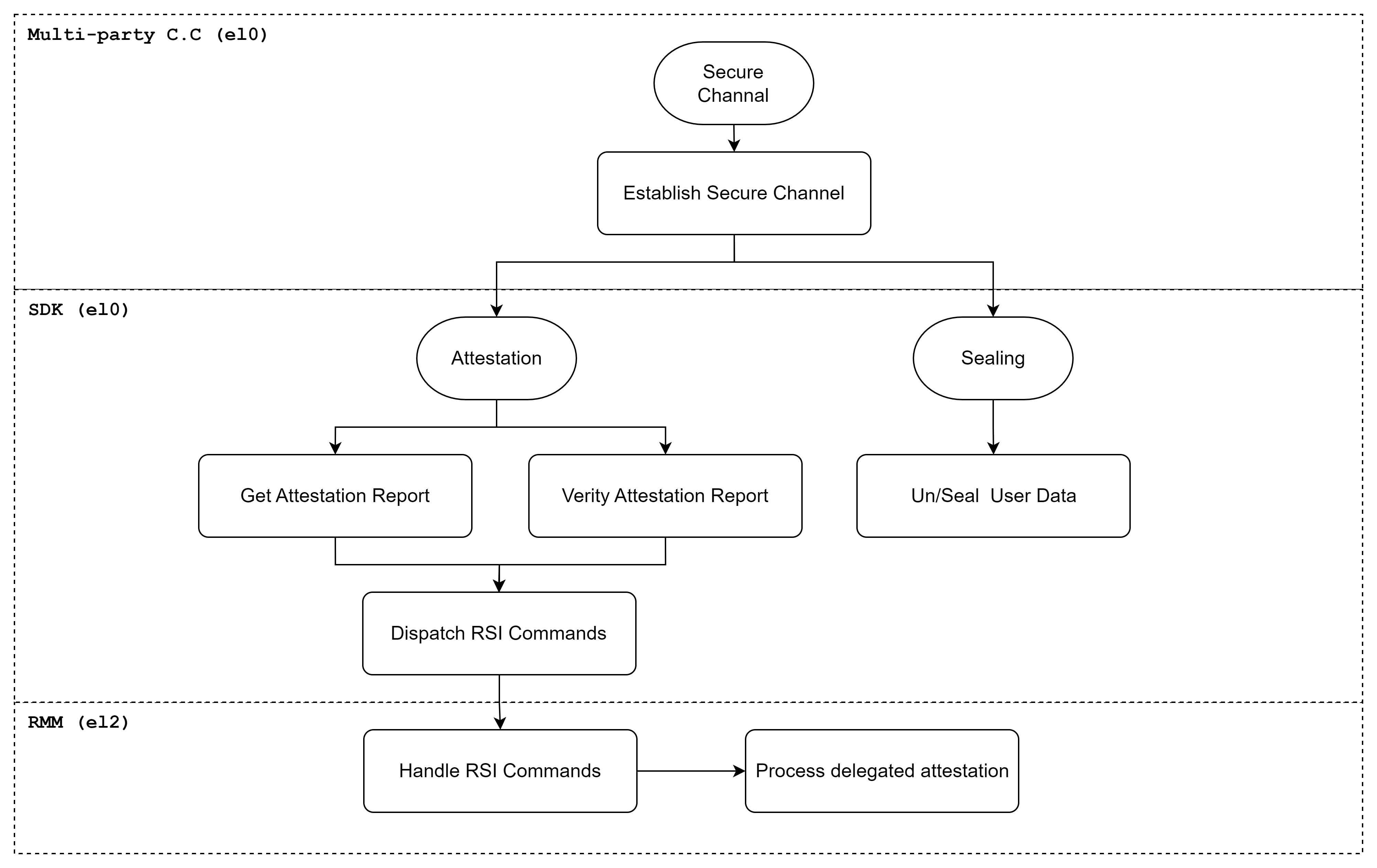

2. System Architecture

Component Structure

The SDK follows a modular architecture. The architecture comprises:

- Attester Module: Retrieves the attestation report generated by RMM.

- Verifier Module: Incorporates the logic for the realm token and platform token verification.

- Parser Module: Provides parsing specific claims of the attestation report.

- Sealing Module: Implements encryption functions in compliance with the multi-part C.C. framework

- C API Module: Provides C APIs for the multi-part C.C. Framework to achieve secure channel functionality.

- RSI Dispatcher Module: Dispatches RSI commands to RMM and get results from RMM

To support secure channel

the C API module exposes attester, verifier and sealing modules to the multi-part C.C framework.

Islet SDK interacts with several components to provide C.C. primitives securely.

The detailed SDK component and connector view is shown below.

NOTE: Delegated attestation module of Islet RMM works in progress. SDK uses the reference RMM now, which means SDK is loosely coupled with RMM.

Security Considerations

The SDK complies with the Arm CCA RMM specification and the CCA Security Model. According to the recommendation, Security Model R0004, SDK utilizes our own HES-enabled system.

3. APIs and Interfaces

The SDK provides the following interfaces:

- Rust APIs for attestation and sealing

- C APIs to multi-part C.C. framework for secure channel

- Low-level interfaces to RMM for the RSI dispatcher

The realm developers can use Rust APIs and C APIs directly for attestation and sealing. The realm developers can use multi-part C.C. framework for secure channel. Low-level interfaces are abstracted to communicate between RMM and SDK internally.

The below diagram shows how API calls flow.

4. Functional Requirements

The SDK defines functional requirements to provide C.C. primitives. Function requirements cover Rust APIs, CAPIs and internal interfaces. The APIs should be tested using Testing Strategy.

| No | Name | Description |

|---|---|---|

| FR1 | Attestation Report Generation | The SDK must provide methods to get a CCA attestation report |

| FR2 | Attestation Report Verification | The SDK must provide methods to verify a CCA attestation report |

| FR3 | Attestation Report Parser | The SDK must provide methods to parse a CCA attestation report |

| FR4 | Simulated Attestation Report Generation | The SDK must provide methods to get a CCA attestation report (simulated ver x86_64) |

| FR5 | Simulated Attestation Report Verification | The SDK must provide methods to verify the CCA attestation report (simulated ver x86_64) |

| FR6 | Simulated Sealing | The SDK must provide methods to seal user data (simulated on x86_64) |

| FR7 | Simulated Unsealing | The SDK must provide methods to unseal sealed user data (simulated on x86_64) |

| FR8 | Secure Channel | The SDK must provide methods to secure channel using multi-part C.C framework |

| FR9 | RSI Dispatcher | The SDK must provide methods to dispatch RSI commands to RMM |

5. Non-Functional Requirements

The SDK defines non functional requirements as compliance standards. The standards include the CCA RMM spec, the CCA Security Model and the multi-part C.C framework.

| No | Name | Description |

|---|---|---|

| NFR1 | Compliance | Adheres to the Arm CCA specification |

| NFR2 | Unit Testing | Comprehensive unit testing for all SDK components |

| NFR3 | Deployment | Provides guidelines for deploying the SDK to multi-part C.C framework |

| NFR4 | RMM Independency | Makes loose coupling with RMM to support other spec compliant RMM |

6. Testing Strategy

The SDK provides our own unit tests for Rust APIs(Attestation and Sealing). The SDK provides the test code to the multi-party C.C framework for C APIs(Attestation and Sealing) and secure channel.

Attestation

- Components

- Islet SDK unit tests

- Multi-part C.C. framework test applications

- Related FRs

- FR1. Attestation Report Generation

- FR2. Attestation Report Verification

- FR3. Attestation Report Parser

- FR4. Simulated Attestation Report Generation

- FR5. Simulated Attestation Report Verification

- Validation methods

- Rust APIs should be passed by SDK unit tests on both x86_64 and aarch64 systems.

- C APIs should be worked with the multi-part C.C. framework on both x86_64 and aarch64 systems.

Sealing

- Components

- Islet SDK unit tests

- Multi-part C.C framework test applications

- Related FRs

- FR6. Simulated Sealing

- FR7. Simulated Unsealing

- Validation methods

- Rust APIs should be passed by SDK unit tests on the x86_64 system.

- C APIs should be worked with the multi-part C.C. framework on the x86_64 system.

Secure Channel

- Components

- Islet SDK unit tests

- Multi-part C.C Framework test applications

- Multi-part C.C Framework attestation service

- Related FRs

- FR8. Secure Channel

- Validation methods

- C APIs should be worked with the multi-part C.C. framework on both x86_64 and aarch64 systems.

7. Conclusion

This Software Design Document provides a comprehensive overview of the SDK's architecture, functionality, functional and non-functional requirements for utilizing attestation, sealing and secure channel features on realms.

Attestation

Remote Attestation (RA) is the key of confidential computing platform, which is basically a method that convinces to verifier that a program (attester) is running on a proper confidential computing platform (e.g., SGX or ARM CCA). Unfortunately, at the beginning of ARM TrustZone which has been widely adopted by mobile device vendors up to this date, it lacks the support of RA in the form of a specification. Some research papers (e.g., SecTEE) have proposed a method to bring RA into TrustZone. However, due to the lack of standardization, RA comes in vendor-specific forms.

To address this problem, ARM CCA has been designed from the beginning, having RA in mind, and comes with a document (ARM CCA Security Model). On top of it, the attestation token format and the architecture described in that document align well with Remote ATtestation procedureS (RATS) specification, which is in active development to standardize RA stuff. This is a good thing as it implies that RA of CCA is not tightly coupled with a specific protocol, rather can connect to any attestation protocol.

Report

An attestation report (shortly, report) is an evidence produced by attester and consumed by verifier. In ARM CCA, report consists of two different tokens:

- CCA Platform token: it is used to assure that attester is running on a secure CCA platform. It covers the measurements of CCA platform components (e.g., RMM and EL3M) and whether it is in debug state.

- Realm token: this token is used to hold the measurement of Realm, which is equivalent to a virtual machine that may contain kernel and root file system.

You can quickly test and see what this report looks like through our CLI tool.

Appraisal policy

According to RATS, there is a term named Appraisal Policy (shortly, Policy), which is central to how to build a real-world service on top of RA. You can basically think of Policy as a set of rules that verifier wants to enforce. For example, what tokens in a report say is basically sort of measurements signed by secure cryptographic keys. So, to build a meaningful security service around it, you have to write down Policy like "(1) Realm must have the measurement of X, (2) the measurement of CCA Platform software must be Y".

As you may notice, managing Policy is out of scope of CCA as this is inherently not dependent on CCA. Instead, there are several open-source projects that take on this capability, for example, Veraison and Certifier Framework. They all aim to implement a standardized way to express and enforce Policy. (note that they sometimes are treated as a unified verification service as they are able to work across various TEEs)

Islet might be able to work with any verification service in theory, but currently bases the ability to handle Policy on Certifier Framework. You can see further details here on what Certifier Framework is and how Islet uses it.

Certifier framework

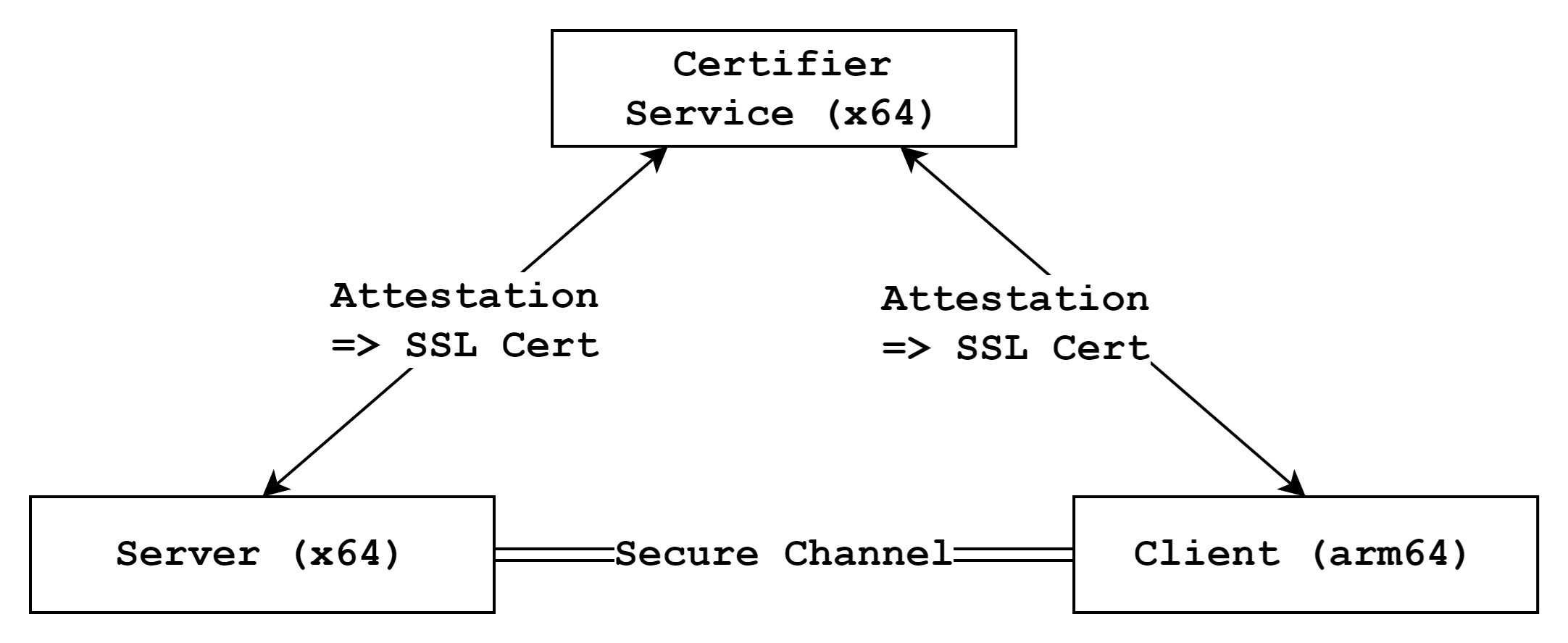

Certifier framework is a framework designed to bring the ability to handle Policy into reality in an TEE-agnostic way. It offers a set of client APIs that can plug into various TEEs including Islet (ARM CCA) and server called Ceritifer Service, which takes the role of verifying attestation reports that come from various TEEs. Islet currently adopts this framework to realize end-to-end confidential services that are likely to involve two or more different TEEs.

What we can do with Certifier Framework

To get what we can do with Certifier Framework, we want to show you a simple example in which client and server want to communicate through a secure channel authenticated by confidential computing platforms. There are three components involved-- certifier service which this framework offers by default, client which runs on CCA, and server which runs on SGX or AMD SEV. The goal of the certifier framework is to allow only pre-defined applications to pass authentication, and thus block malicious applications in the first place.

The first thing we need to do to build this service is to write down Policy, that is to say, embedding a set of claims into a format called Policy. The Policy would look like this in verbal form:

- The measurement of application trying to join this service must be one of Client_Measurement and Server_Measurement.

- Applications trying to join this service run on a secure confidential computing platform.

After making an agreement on the policy, authentic client and server are going to be launched and generate an attestation report and send it to the certifier service. And then, the certifier service verifies that report based on the policy, in other words, verifying if that report doesn't violate any claims in the policy. Only if properly verified, the certifier service issues admission cert which is going to be used to build a secure channel between client and server. From that point on, they can trust each other and send messages to each other securely.

A more complex example

For a more realistic case, we've built Confidential ML on top of the certifier framework. See this page to know what it is in more depth.

Contents

Secure interactions with the Host

In most TEEs, interacting with the host (or the non-secure environment) is the most error-prone part as the host can pass anything for malicious purposes. For example, the paper named A Tale of Two Worlds demonstrated that many TEE SDKs made some mistakes while implementing such interfaces. Also, it's not trivial to mitigate Iago attacks that most TEEs inherently are affected by.

As Islet aims to bring the best level of security, we take those problems seriously and try to tackle them through the syntax of Rust. This page shows the way we're doing that aspect.

Secure host memory access

In ARM CCA, for some cases, RMM needs to map host memory and read/write something from/to that memory.

For example, when RMI_REALM_CREATE is invoked, RMM has to get a physical memory address where parameters are placed at

and read them from that memory.

These accesses must be securely done as insecure implementations may open things up for attackers to break ARM CCA.

We use copy_from_host_or_ret! and copy_to_host_or_ret! as a means of secure host memory access.

#![allow(unused)] fn main() { listen!(mainloop, rmi::REALM_CREATE, |arg, ret, rmm| { let rmi = rmm.rmi; let mm = rmm.mm; // key arguments // -- Params: the type of what the host passes // -- arg[1]: a physical address that points to where we should read from let params = copy_from_host_or_ret!(Params, arg[1], mm); // ... use params ... } }

What these two macros do is,

- do per-struct security checks and map host memory into RMM only if it passes all checks.

- copy from/to host memory to RMM stack memory that is bound to each CPU.

- unmap host memory

After it gets done, we can access params that reside in RMM memory, not host memory.

So it's secure against concurrency-based attacks such as double-fetch attacks.

If some additional security checks on some field value are needed (e.g., Params.hash_algo should be either 0 or 1),

you can do it via validate() in Accessor trait. The specified validation is called before RMM accesses host memory.

#![allow(unused)] fn main() { impl HostAccessor for Params { fn validate(&self) -> bool { if self.hash_algo == 0 || self.hash_algo == 1 { true } else { false } } } }

RMI/RSI command validation

In ARM CCA, each RMI/RSI command has a different number of input/output parameters. So we need to take special care in accessing such parameters.

To catch any mistakes regarding this in advance, Islet developers must explicitly define Constraint as follows.

#![allow(unused)] fn main() { // (1) define RMI/RSI constraints lazy_static! { static ref CONSTRAINTS: BTreeMap<Command, Constraint> = { // This line says that RMI_DATA_CREATE has 6 input arguments and 1 output argument. m.insert(rmi::DATA_CREATE, Constraint::new(rmi::DATA_CREATE, 6, 1)); } } // (2) check defined constraints at runtime listen!(mainloop, rmi::DATA_CREATE, |arg, _ret, rmm| { // when you access arg[0], nothing happens because it doesn't cause an out-of-bound access. let target_pa = arg[0]; // but, if you access arg[7], run-time panic occurs as this RMI command only has 6 arguments. // you can catch this error in the testing phase and fix it in advance. let xxx = arg[7]; } }

Contents

Confidential machine learning with Islet

Introduction

In order for traditional machine learning (ML) to work, data provider (e.g., mobile device) would have no choice but to give their data to server which offers the ability to run ML operations (inference and training). It apparently raises an issue of user data privacy because data provider might want to keep their data local.

Federated learning (FL), also known as privacy-preserving machine learning, has come to the rescue of this privacy issue by the concept of on-device training. More technically, in this way, it would no longer send user data to server but instead send model parameters derived as a result of on-device training.

On one hand, federated learning is a good thing for security, but on the other hand, practical issues are remaining, which hinder the wide adoption of it. The thing is, a lot of ML services have already been built over traditional ML, so it's never going to be easy to turn them into a federated learning compatible form. Some of them might want to stick to traditional ML as security could not be a big priority.

On top of it, there are still security issues remaining even when you use federated learning. For example, say that you are working for an AI company specializing in model development and your AI models have to be treated as private assets. But for machine learning to work, you have to send your model down to devices and trust they do not see or reveal your model. Also, there have been academic papers that demonstrate it's still possible to learn something about user data through adversarial ML attacks even in the federated learning setting (e.g., paper-1, paper-2).

These problems mentioned by far have led us to try to find out a practical yet general solution that can cover every type of machine learning (from traditional to federated). And that solution is what we call confidential machine learning (shortly, confidential ML), which is based on trusted execution environments (TEE). Admittedly, Confidential ML is not new and many companies working on TEE already have services that offer confidential ML but to some limited extent. Specifically, what most companies are caring about is only server-side aspects and device-side aspects have not been seriously considered, and thus they all fail to build an end-to-end confidential ML service.

In this article, we're going to explore how Islet makes a unique addition to confidential ML to accomplish a real end-to-end confidential ML service, ranging from traditional ML to federated learning.

Issues in traditional ML

In traditional ML, there are two kinds of privacy leaks:

- data leak: devices have to send data in order for servers to do ML operations including inference and training. In this case, those servers can see user data without permission.

- model leak: to reduce latency and retrieve an ML outcome quickly, devices can download an ML model from servers and do on-device inferences using ML frameworks such as TensorFlow lite. In this case, devices can see an ML model that should be treated as secret to servers.

While most confidential computing platforms are targeting cloud servers (e.g., Intel SGX and AMD SEV), most ML clients come from mobile devices where confidential computing is out of reach at the time of this writing. Of course, most mobile devices based on ARM have TrustZone, which is one instance of TEE, but it is typically not allowed for 3rd party applications to get protected by TrustZone as it is built for vendor-specific confidential information.

The problem is, while data leak can easily be eliminated by running servers on confidential computing platforms such as Intel SGX or AMD SEV, we have no way to protect model leak against malicious or honest-but-curious devices. And this cannot be solved without the aid of a device-side confidential computing platform.

Islet for traditional ML

Islet can tackle the above privacy issues that traditional ML has, by extending confidential computing to mobile devices. There are three different components involved in this confidential traditional ML: (1) model-provider, (2) device (data-provider), (3) runtime.

model-provider is the owner of AI models and wants to prevent any other components from seeing their models that contain confidential information. device represents mobile devices and thus sends local data to runtime to benefit from ML services. We assume device here belongs to a specific vendor like Samsung. Lastly, runtime provides ML functionalities and acts as a server. It takes data from device and model from model-provider and does actual ML stuff. In this setting, runtime and model-provider can be running on confidential computing platforms that public cloud vendors such as Azure or Google cloud provide, while device can be running on Islet which is based on ARM CCA.

Roughly thinking, putting everything into the scope of confidential computing suffices to solve both data leak and model leak. But this holds true only if it's assumed that those three components mutually trust each other. For example, runtime expects device to keep their AI models in Islet and not to reveal them anywhere else. But, device can freely break that assumption and take AI models out of Islet with the intent of uncovering how their models work, which raises model leak.

To prevent this from happening, we need one more assumption that all codes of those three components are open-sourced and therefore everyone can see and audit them. If runtime is programmed to keep user data local and it is open-sourced, we can assure that in no circumstances will data leak happen. It is the same in model leak. To check if a program that is about to launch matches what we expect (e.g., runtime that doesn't leak user data), we need to take the measurement of that program and associate that with the attestation process. We use Certifier framework to realize this verification as that framework is designed to work across various TEEs in a unified manner, satisfying our requirement.

Issues in federated learning

As mentioned earlier, in federated learning, someone may think data leak will not happen as training is done on the device side, and thus user data never leaves devices. But this assumption has turned out to be wrong as several attacks have demonstrated that malicious servers (or honest-but-curious servers) can infer user data from model parameters (i.e., weights). For example, this paper proposed an attack that extrapolates what data was used in on-device training from model parameters and successfully demonstrated it could recover some images used. (which is called inversion attack)

There is another kind of attack, which is called inference attack, that malicious devices can launch. A malicious device might want to know what data is used in another device. In federated learning, each device can download new global models, which reflect training results from all devices. This means that a newly downloaded model has information about data from other devices, which is what a malicious device can exploit for inference attacks. By doing a comparison between two global models in a row, a malicious device can learn something about another device's data, for example, whether an image used in training includes someone on glasses or not.

One more interesting attack is what is called poisoning attack. As the name suggests, some devices can train with a large number of fake data in order to poison a global model in attackers' favor. For example, attackers might want to induce a victim to visit a specific place in order to leak the victim's private information in person. To do so, they can generate tons of data that would lead AI models to recommend visiting a specific place no matter what users ask.

Islet for federated learning

To stop the aforementioned attacks (inversion attack, inference attack, and poisoning attack), we can take the same approach as we did with traditional ML. The only difference would have to do with runtime as runtime servers would not do ML operations (training and inference) in federated learning. Instead, they do a so-called aggregation algorithm to build a new global model from local models that each device sends up.

For the former two attacks (inversion and inference), the open-source based security argument could still work in the same way. This is because, to launch them, attackers have to run programs that contain codes to do those attacks, leading to a measurement that is different than the allowed ones.

As for the last one (poisoning), it could not get protected in the same way as what actually breaks security comes from "data" not "program". In other words, even if device is authentic, a model could be trained with fake data, considering an attacker who is capable of taking control of "data" fed into the model. We see that this attack could be addressed to some extent by designing and implementing peripheral protections (e.g., building a secure channel from keyboard hardware all the way to a secure application) on top of CCA primitives.

Time to play around with real examples

Anyone can try out what we've explained so far, that is to say, running traditional ML or federated learning on top of Islet with simple ML models. Check out this markdown file to play around with Islet for confidential ML!

Cross-platform End-To-End Encryption (E2EE)

E2EE with attestation

E2EE is a type of communication security that ensures only the intended recipients can read the messages being sent and received. This example demonstrates how to integrate remote attestation validation into the E2EE establishment process using the Certifier Framework. Our example extends the framework's sample application to demonstrate E2EE between x64 and arm64 platforms.

Components

This example consists of three main components:

- Certifier Service: An x64 service responsible for performing attestation and checking against a pre-written policy

- E2EE Server App: An x64 application that requests attestation to the service and attempts to establish a secure channel with the client

- E2EE Client App: An arm64 application that requests attestation to the service and attempts to establish a secure channel with the server

Helper Scripts

We provide several helper scripts to simplify building and running the example:

- setup.sh: Installs required dependencies such as protobuf and gflags

- provisioning.sh: Configures the necessary measurements and policies for the service, server, and client

- build-{service, server, client}.sh: Builds each component separately

- run-{service, server, client}.sh: Starts each component separately

How to run this example

1. Install Dependencies

First, navigate to the examples directory and execute the setup script to install required dependencies:

$ cd $(islet)/examples/cross-platform-e2ee

$ ./setup.sh

2. Configure Measurements and Policies

Next, configure the necessary measurements and

policies by editing the provisioning.sh script and

specifying your own values for the following variables:

Those instructions are quoted from well-descripted document

You can get the server measurement with cli on the host machine:

$ cd $(islet)/cli

$ make x86_64

$ ./islet measurement-read --index 0

Simulated attestation operation on x86_64.

"580bd77074f789f34841ea9920579ff29a59b9452b606f73811132b31c689da9"

You also can get the client measurement with cli on the realm:

$ $(islet)/scripts/fvp-cca -nw=linux -rm=linux --hes

$ telnet localhost 5000

$ ./launch-realm.sh

# cd /shared

# insmod rsi.ko

# ./islet measurement-read --index 0

[ 2533.544864] rsi: device rsi open

[ 2533.664676] rsi: ioctl: measurement_read: 0

[ 2533.831443] rsi: device rsi released

"8a1d5cd26a0ee477067d18e2ff051687d18e1450b513508ba98910fa262b1fa3"

After getting measurments,

edit the script provisioning.sh.

# Set your measurements

SERVER_MEASUREMENT=580bd77074f789f34841ea9920579ff29a59b9452b606f73811132b31c689da9

CLIENT_MEASUREMENT=8a1d5cd26a0ee477067d18e2ff051687d18e1450b513508ba98910fa262b1fa3

Once you have edited the script, run it to generate the necessary keys and certificates:

$ ./provisioning.sh

...

...

3 blocks

1: Key[rsa, policyKey, b1a4dd71c6ea998df84b84689acb3b2eece897ca] says Key[rsa, policyAuthority, b1a4dd71c6ea998df84b84689acb3b2eece897ca] is-trusted-for-attestation

2: Key[rsa, policyKey, b1a4dd71c6ea998df84b84689acb3b2eece897ca] says Measurement[580bd77074f789f34841ea9920579ff29a59b9452b606f73811132b31c689da9] is-trusted

3: Key[rsa, policyKey, b1a4dd71c6ea998df84b84689acb3b2eece897ca] says Measurement[491cf94bdb951308672a839776359d6ac22808bad2d318226ef0ea2979693e2e] is-trusted

3. Run network enabled FVP

The client runs on the FVP, so you need to enable networking on FVP before starting the client. Follow these steps to do so: For more information about the network setting refer this.

$ cd $(islet)

$ ./scripts/fvp-cca --normal-world=linux-net --realm=linux --hes

Then, in the host Linux environment on FVP, launch the realm:

$ ./launch-realm.sh net

Finally, in the realm Linux environment on FVP, set the realm IP address and load the RSI kernel module:

You have to set current host machine time on realm linux. Because the service issues SSL certificates based host machine time to the server and the client

$ cd /shared

$ ./set-realm-ip.sh

$ date ${CURRENT_HOST_MACHINE_TIME} // ex) 122112002023

$ insmod rsi.ko

4. Build components

Now that you've configured everything, build the components using the provided build scripts:

// Compile the service

$ ./build-service.sh

// Compile the client

$ ./build-server.sh

// Cross-compile the client

$ ./build-client.sh

5. Run components

With all components built, you're ready to start them:

Open three separate terminal windows or tabs. In the first window, start the certifier service:

$ ./run-service.sh

In the second window, start the server app:

$ ./run-server.sh

In the third window, switch to the /shared directory on FVP and start the client app:

$ cd /shared

$ ./run-client.sh

That's it! Now you may see the server and client successfully establish an end-to-end encrypted connection.

Software attestation

Why does it matter?

Software attestation is the process of certifying that some program of interest possess certain properties. Typically, it boils down to checking whether the hashes of binaries match the values provided by the developers. Moreover, in the field of confidential computing it is useful to assert the trustworthiness of the environment our program is executing in. This is essential from a couple of perspectives:

- As a software engineering company you can ensure that the virtual machines that will be running the program aren't malicious and weren't modified by a third party.

- As a user you can be sure that the secrets used to authenticate and provide encryption layer in the application are stored securely.

How does it work?

There are two ways of performing software attestation:

Local attestationwhere the root of trust is derived from hardware and the device is able to attest itself (this is yet to be implemented in islet).Remote attestationwhere the root of trust is partly provided by a third party server called thereliant partythat implements an attestation protocol (Veraison is such software).

In detail the protocol implemented by islet is based on the TLS protocol which handles the handshake and the attestation is implemented by the custom certificate creation and validation procedures.

In the simplest example, which is implemented by islet, the reliant party implements the TLS server with a self-signed root certificate and a custom certificate verifier.

The client in this case is a application running inside a secure realm. It implements the TLS client with the custom certificate generator that creates a self-signed certificate with the attestation token embedded in the optional field.

As a reminder, an attestation token is a set of claims provided by the execution environment that will be checked by the reliant party.

Additionally, to prevent replay attacks the server will generate a random challenge that the client is expected to embed inside the token. When the TLS 3-way handshake has been finished successfully, the software is attested and the opened TCP connection can be used to transfer sensitive data or other software.

Implementation details

Legend

Islet(this project) is a Realm Management Monitor implemented in Rust it is used to manage realms and generate attestation tokens in the upcoming ARMv9 architecture.RaTlsClientis the client of the modifiedTLSprotocol which uses a custom cert generator that embeds the attestation token.RaTlsServeris the server of the modifiedTLSprotocol which implements custom cert verifier that usesVeraisonandRealm measurementsto attest the software running inside the realm.Verification serviceit's the actual service in theVeraisonproject responsible for attesting software, currently it only checks the platform part of the token.Realm measurementsis a data store holding the trusted realm measurements, so that theRaTlsServercan check the realm part of the token (as mentionedVeraisononly attests the platform part).

Attestation flow

- The attestation is initiated by the

RaTlsClientcreating aTCPconnection to theRaTlsServerand starting theTLS3-way handshake. - The

RaTlsServersend a challenge to the client (it's a 64bit number used to protect against replay attacks). - The

RaTlsClientprovidesIsletwith the challenge and retrieves a signed attestation token containing:- platform measurements (bootloaders measurements,

Isletmeasurements itself, etc...) - signature signed by the

CPAKorPlatform key - realm measurements

- realm challenge which is the challenge we got from

RaTlsServer - signature signed by

RAKorRealm attestation key

- platform measurements (bootloaders measurements,

- The

RaTlsClientcreates a self-signedSSLcertificate with the token embedded as aX509extension and provides it to the server. - The

RaTlsServerextracts the token from the certificate and validates the challenge. - Next it uses

Verification serviceto validate the platform part of the token. - At last the

Realm measurementsare used to check the realm part. - If all check finish successfully, the 3-way handshake concludes and an encrypted

TCPconnection between theRaTlsClientandRaTlsServeris opened and ready to transfer sensitive data.

For more detailed instruction refer to RUN. It contains a step by step guide of running the attestation demo using Islet.